RAGnarok: How AI Agents are Transforming Financial Work through RAG and Tool Use

We dive deep on the technical specifications for an Agent system for Finance and what we learned building the world's most advanced RAG pipeline for financial queries.

Decisional (an AI Agent for deep financial work) went from 29/150 to 119/150 on FinanceBench over the past three months (primarily without using o1) through various optimizations to RAG and Tool use. This post highlights what we learned and what you can do to achieve a similar 4x boost in accuracy.

Why RAGnarok?

Ragnarok signals the end of an old world and the start of a new one. For the world of Finance, the explosion of unstructured data - PDFs, earnings calls, market news - is its own form of “data Ragnarok”. This chaotic information overload needs an army of analysts to sift through. Unlike in the myth, however, this isn’t the end but the start of a new era - the bionic financial professional powered by AI.

As firms and individuals start exploring AI, the most common problems they run into are hallucinations and a lack of context. Retrieval Augmented Generation architectures address these problems by making an AI system stateful and grounded, so that it can deal with thousands of pages of complex documents and Excel files. However, these problems multiply when you need to create an Agent system that chains multiple tools and actions together.

You will find this post helpful if you are trying to apply any of the following technologies to your AI stack:

RAG

Agents

LLMs & VLMs

Vector Databases

Evals

Document Ingestion & Parsing

AI Agents need to be built on top of a strong cognitive architecture

RAG - A crucial Tool in the Agent toolkit

Deep learning is a field inspired by biology, with our brain being the most efficient learning system that we started trying to mimic. This biomimetic approach was revolutionized through the contributions of pioneers like Geoffrey Hinton and Claude Shannon. Similarly, an AI Agent system should mimic an expert financial analyst. This means you need to give it Tools that it can use to replicate a human analyst's behaviour or skills. At the same time, introducing too many tools or making a tool too complex makes it much harder to successfully chain a series of tools together to complete a workflow.

A Tool is a specialized component within an AI Agent system that replicates a specific capability or function. Tools are designed to be simple enough to chain together effectively in workflows, while being sophisticated enough to handle complex tasks like RAG queries, data extraction, or table parsing.

EVOLUTION IN COMPUTING USHERING IN THE AGE OF THE AGENT

Core Tools in the AI Financial Analyst stack

An AI Agent for Financial work is built with a Cognitive Architecture that resembles the mental models of a good financial analyst:

Ability to read widely - A good analyst is not constrained by context windows but can go through thousands of pages of information making notes and summaries along the way to build a strong mental model.

With RAG support, our AI Agent can read thousands of documents and is not limited to the context window of an LLM.

Ability to read deeply - For some information you need to be able to go through every piece of information, i.e. read deeply.

The AI Agent must be able to deeply analyse any page of a document, including the diagrams and tables, and not be limited by OCR or text layer extraction.

Be Quantitative - Keep a keen eye on metrics instead of ideas.

We built a metric index that specifically tunes answers on numbers linked to entities within documents, so that the Agent does not lose the context of important metrics that help guide financial analysis.

"In my whole life, I have known no wise people who didn't read all the time -- none, zero." - Charlie Munger

Decisional’s Agent Tools Inspired by Experts

Based on this, Decisional built the following Tools to better support these expert financial analyst capabilities:

Extract Custom Table - A tool that accepts a table schema and returns the extracted values in that predefined format.

Query - Runs a RAG workload across a set of pre-uploaded data sources. Combines search and synthesis over thousands of data sources.

Extract Chunks - A tool that deeply parses a data source into contextual chunks of text (i.e. chunks that describe graphs, charts, tables and other important context).
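To give a feel for how small each of these Tools can stay, here is a minimal sketch that declares the three of them as plain Python callables in a registry. Every name, parameter and return shape below is hypothetical; this post does not describe Decisional's actual interfaces, and the function bodies are stubs.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Tool:
    """A single capability the agent can call (hypothetical shape)."""
    name: str
    description: str
    run: Callable[..., object]


def extract_custom_table(source_id: str, schema: Dict[str, str]) -> List[Dict[str, object]]:
    # Stub: a real tool would parse the source and fill rows matching `schema`.
    return [{column: None for column in schema}]


def query(question: str, source_ids: List[str]) -> str:
    # Stub: a real tool would retrieve relevant chunks and synthesize an answer.
    return f"(answer to {question!r} over {len(source_ids)} sources)"


def extract_chunks(source_id: str) -> List[str]:
    # Stub: a real tool would return contextual chunks (text, tables, charts).
    return [f"{source_id}: chunk 1"]


# Registry keyed by tool name, so an agent loop can look tools up by name.
TOOLS = {
    tool.name: tool
    for tool in (
        Tool("extract_custom_table", "Fill a caller-supplied table schema from one source.", extract_custom_table),
        Tool("query", "Answer a question with RAG over pre-uploaded sources.", query),
        Tool("extract_chunks", "Parse one source into contextual chunks.", extract_chunks),
    )
}
```

Keeping each entry to a name, a one-line description and a single callable is one way to make the tools easy to chain and to test in isolation.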

Building the Agent

Decisional Cognitive Architecture

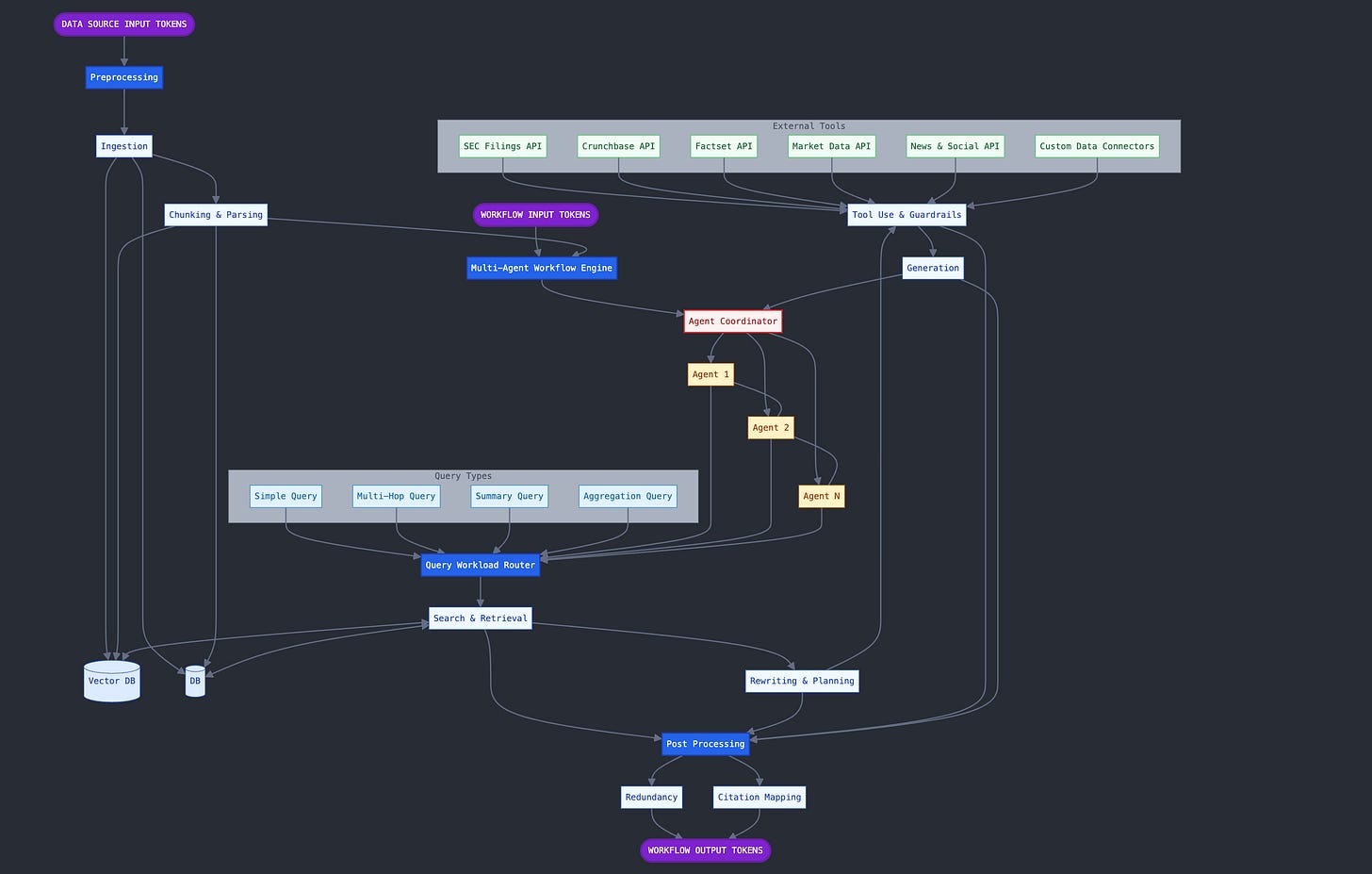

The goal of Decisional’s Agent System is to take two kinds of input tokens:

Data Source Input Tokens

Tool Run Input Tokens

The Data Source input tokens are ingested and preprocessed so that they can be used at Tool run execution time, when a new set of Tool Run input tokens is passed to the system to execute a workflow.

Since Tool run execution and data source preprocessing are decoupled, it is possible to use sophisticated Vision Language Models to process each data source independently instead of relying on text layer extraction alone.

When workflows are executed, the Agent system can make multiple tool calls and can use more sophisticated techniques, such as a query router, to decide which underlying data sources to analyse.
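The decoupling described above can be pictured with a small sketch: ingestion fills a store ahead of time, and a tool run only reads from it after a router has picked the relevant sources. The `DataStore`, `ingest`, `route` and `run_tool` names are assumptions made for illustration, and the word-overlap router is a deliberately naive stand-in for whatever routing Decisional actually uses.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class DataStore:
    """Holds preprocessed chunks per data source (hypothetical structure)."""
    chunks: Dict[str, List[str]] = field(default_factory=dict)


def ingest(store: DataStore, source_id: str, pages: List[str]) -> None:
    # Preprocessing happens once, ahead of any tool run. A real pipeline could
    # call a vision language model per page here; this stub only trims whitespace.
    store.chunks[source_id] = [page.strip() for page in pages if page.strip()]


def route(store: DataStore, question: str) -> List[str]:
    # Toy query router: pick sources whose chunks share a word with the question.
    words = set(question.lower().split())
    return [
        source_id
        for source_id, chunks in store.chunks.items()
        if any(words & set(chunk.lower().split()) for chunk in chunks)
    ]


def run_tool(store: DataStore, question: str) -> Dict[str, List[str]]:
    # Tool run time: only reads what ingestion already prepared.
    return {source_id: store.chunks[source_id] for source_id in route(store, question)}
```

Because `run_tool` never touches raw documents, the expensive per-page processing in `ingest` can be made as thorough as needed without slowing down individual tool calls.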

Specific Optimizations that led to a 4X increase in performance

Garbage In, Garbage Out: Unlike systems that rely solely on text layer extraction and basic chunking strategies, we developed custom ingestion pipelines that preserve the context of images, diagrams, and tables - crucial elements in financial documents that often contain key insights. Our chunking strategy involves using LLMs to intelligently partition a page and create detailed, specific chunks. We also attach document and section level metadata for each chunk - an enhanced version of Anthropic’s Contextual Retrieval.
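As an illustration of attaching document and section level metadata to each chunk, the sketch below prepends that context to the text that gets indexed. The `Chunk` fields and the bracketed header format are assumptions, not Decisional's schema, and the `(kind, body)` pairs are assumed to come from an upstream LLM or VLM page partitioner that is not shown.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Chunk:
    document: str
    section: str
    page: int
    kind: str  # e.g. "text", "table", "chart"
    body: str

    def to_index_text(self) -> str:
        # Prepend document and section context so the chunk stays meaningful on its own.
        header = f"[{self.document} | {self.section} | p.{self.page} | {self.kind}]"
        return f"{header}\n{self.body}"


def contextualize(document: str, section: str, page: int,
                  parts: List[Tuple[str, str]]) -> List[Chunk]:
    # `parts` holds (kind, body) pairs produced by the page partitioner.
    return [Chunk(document, section, page, kind, body) for kind, body in parts]
```

A table row such as `Revenue | 2023 | 2022` is ambiguous on its own; with the header it carries the company, filing and section it came from into both the embedding and the prompt.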

Metric-Aware Parsing: We built a specialized metric index, used during both ingestion and inference, that helps LLMs keep the precise context of numerical data. This ensures that critical financial metrics aren't lost or misinterpreted during analysis.
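One way to picture a metric index is as a lookup from (entity, metric) to the numbers found for it, each carrying its period, unit and source. The sketch below is a guess at the general idea only; the class, its fields and the ACME figures are made up for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class MetricFact:
    entity: str
    metric: str
    period: str
    value: float
    unit: str
    source: str  # where the number was read, e.g. "acme_10k p.41"


class MetricIndex:
    def __init__(self) -> None:
        self._facts: Dict[Tuple[str, str], List[MetricFact]] = defaultdict(list)

    def add(self, fact: MetricFact) -> None:
        # Keys are case-insensitive so "Revenue" and "revenue" land together.
        self._facts[(fact.entity.lower(), fact.metric.lower())].append(fact)

    def lookup(self, entity: str, metric: str, period: str = "") -> List[MetricFact]:
        # An empty `period` returns every period recorded for the metric.
        facts = self._facts.get((entity.lower(), metric.lower()), [])
        return [f for f in facts if not period or f.period == period]


index = MetricIndex()
index.add(MetricFact("ACME", "Revenue", "FY2023", 120.0, "USD millions", "acme_10k p.41"))
index.add(MetricFact("ACME", "Revenue", "FY2022", 100.0, "USD millions", "acme_10k p.41"))
```

Storing the unit and period next to each value is what lets an answer distinguish, say, FY2022 revenue from FY2023 revenue when both appear in the same table.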

Sophisticated Query Planning: Our system excels at complex financial queries through advanced query rewriting and planning, particularly for multi-hop queries that require broader search and retrieval patterns.
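A multi-hop query can be thought of as an ordered plan in which later sub-queries are rewritten using the answers to earlier ones. The sketch below shows only that execution loop, under the assumption that the plan has already been produced (in practice, presumably by an LLM). `Step`, `execute_plan` and the `retrieve` callback are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Step:
    name: str
    template: str  # may reference earlier step results as {name}


def execute_plan(steps: List[Step], retrieve: Callable[[str], str]) -> Dict[str, str]:
    results: Dict[str, str] = {}
    for step in steps:
        # Rewrite the sub-query by filling in answers from earlier hops.
        sub_query = step.template.format(**results)
        results[step.name] = retrieve(sub_query)
    return results


plan = [
    Step("year", "Which fiscal year does the latest ACME 10-K cover?"),
    Step("capex", "What was ACME capital expenditure in {year}?"),
]
```

Here the second hop cannot be searched for until the first has resolved which fiscal year is meant, which is why a single retrieval pass over the original question tends to fall short.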

Time-Aware Reranking: Financial data is inherently time-sensitive. Our reranking system labels all metrics with timestamps to maintain temporal context and relevance.
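A minimal sketch of time-aware reranking, assuming each retrieved candidate already carries a fiscal-year label from ingestion: the retriever's score is reduced by a fixed penalty for every year of distance from the period the query asks about. The linear penalty and its default of 0.1 are illustrative assumptions, not the scoring used in Decisional's system.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Candidate:
    text: str
    score: float      # relevance score from the retriever, higher is better
    fiscal_year: int  # timestamp label attached at ingestion


def rerank(candidates: List[Candidate], target_year: int, penalty: float = 0.1) -> List[Candidate]:
    # Subtract a fixed penalty per year of distance from the target period.
    def adjusted(candidate: Candidate) -> float:
        return candidate.score - penalty * abs(candidate.fiscal_year - target_year)

    return sorted(candidates, key=adjusted, reverse=True)
```

With this adjustment, a slightly less similar chunk from the right year can outrank a more similar chunk from the wrong one, which matters when the same metric is reported in near-identical wording every year.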

Design Tools to be modular and obvious

The Anthropic blog has good advice here:

Put yourself in the model's shoes. Is it obvious how to use this tool, based on the description and parameters, or would you need to think carefully about it? If so, then it’s probably also true for the model. A good tool definition often includes example usage, edge cases, input format requirements, and clear boundaries from other tools.

Source [4] - https://www.anthropic.com/research/building-effective-agents

Modular tool design in financial AI systems follows the principle of specialized components working together seamlessly. Each tool functions as a focused expert - like a table extraction tool that excels at parsing financial statements but doesn't attempt sentiment analysis. This specialization enables tools to be chained together effectively, similar to how analysts collaborate on complex projects. By keeping interfaces simple and predictable while maintaining robust error handling, these modular tools can be combined into sophisticated workflows while remaining easy to test and validate individually.
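To make the advice above concrete, here is a hedged sketch of a tool definition whose description includes example usage, an edge case and a boundary with another tool. It uses the name / description / input_schema layout common in LLM tool-calling APIs; the tool name, parameters and the empty-list behaviour are hypothetical, and `missing_arguments` is a deliberately minimal check rather than a full JSON Schema validator.

```python
from typing import Any, Dict, List

EXTRACT_CUSTOM_TABLE: Dict[str, Any] = {
    "name": "extract_custom_table",
    "description": (
        "Extract one table from a single data source into the columns given in `schema`. "
        "Use `query` instead for free-text questions. "
        "Example: source_id='acme_10k', schema={'fiscal_year': 'integer', 'revenue': 'number'}. "
        "Returns an empty list when no matching table exists."
    ),
    "input_schema": {
        "type": "object",
        "properties": {
            "source_id": {"type": "string"},
            "schema": {"type": "object"},
        },
        "required": ["source_id", "schema"],
    },
}


def missing_arguments(tool: Dict[str, Any], arguments: Dict[str, Any]) -> List[str]:
    # Minimal check only: reports required parameters the caller left out.
    return [name for name in tool["input_schema"]["required"] if name not in arguments]
```

Rejecting a malformed call before it reaches the tool gives the model a short, specific error to recover from, rather than a failure deep inside a chained workflow.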

Monitor Tool Performance

Tool performance is fundamental - even more critical than overall workflow effectiveness. Our evaluation strategy operates on two levels. First, we validate against FinanceBench [3], an open-source suite of 150 finance-specific questions that provides standardized performance metrics. Our DRAG system scores 119 out of 150, a 4.1x improvement over the baseline score of 29.
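The arithmetic behind the headline number is simple enough to check directly, using the 29 and 119 correct answers out of 150 reported at the top of this post. The helper names are illustrative only.

```python
from typing import List


def accuracy(correct: List[bool]) -> float:
    # Fraction of benchmark questions answered correctly.
    return sum(correct) / len(correct)


def improvement(before_correct: int, after_correct: int) -> float:
    # Ratio of correct answers after vs. before, on the same question set.
    return after_correct / before_correct


TOTAL = 150
print(f"before: {29 / TOTAL:.1%}")                # before: 19.3%
print(f"after:  {119 / TOTAL:.1%}")               # after:  79.3%
print(f"ratio:  {improvement(29, 119):.1f}x")     # ratio:  4.1x
```

Note that the 4.1x figure is a ratio of correct answers; in absolute terms the change is from roughly 19% to roughly 79% accuracy.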

However, excelling at public benchmarks is just the starting point. Second, and more importantly, we develop custom evaluations that mirror real customer use cases, ensuring our tools perform effectively in practical scenarios. This combination of standardized testing and customer-specific evaluation helps us build tools that are both technically robust and practically valuable for financial analysis.

Conclusion

The financial industry's "RAGnarok" represents not an ending but a revolutionary beginning, where AI and RAG technologies are transforming how we handle the overwhelming volume of unstructured financial data that previously required massive human effort to process. This shift marks the emergence of a new kind of financial professional - one augmented by AI capabilities.

Our progress is both measurable and meaningful, with systems like DRAG showing a 4.1x improvement over baselines. However, the true value lies not in benchmark numbers but in how these tools are enabling financial professionals to process, synthesize, and act on information at scales previously impossible, while maintaining the crucial human element in decision-making.

The future of financial analysis will be built on the foundation of modular, specialized AI tools working in concert with human expertise. By focusing on augmentation rather than replacement, and maintaining strict principles around tool design and evaluation, we're creating systems that amplify rather than substitute human intelligence in financial decision-making.

[1] https://stratechery.com/2024/nvidia-waves-and-moats/

[2] https://arxiv.org/pdf/2406.04744

[3] https://arxiv.org/abs/2311.11944

[4] https://www.anthropic.com/research/building-effective-agents